TechTalk Daily

By: Daniel W. Rasmus for Serious Insights

At the Apple Worldwide Developers Conference (WWDC) today, the company showed a bevy of new features across its platforms following a commercial for its Apple TV+ streamer. I’m sure that many of those watching the live stream from Tim Cook and the team kept waiting for AI, just as I did.

Many of the new features demonstrated machine learning, like e-mail categorization, but not generative AI. After all those walk-throughs, Cook returned with one more thing without saying that iconic phrase out loud. It was perhaps the biggest software “one more thing” in Apple history.

Apple unveiled its AI offering, which, not surprisingly, swapped out the word “artificial” for “Apple.” Thus, Apple Intelligence.

[Read the Apple press release here].

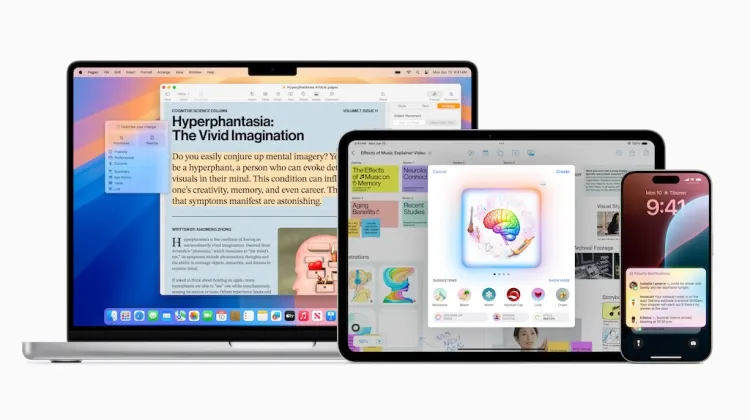

AI features shared at WWDC 2024 include:

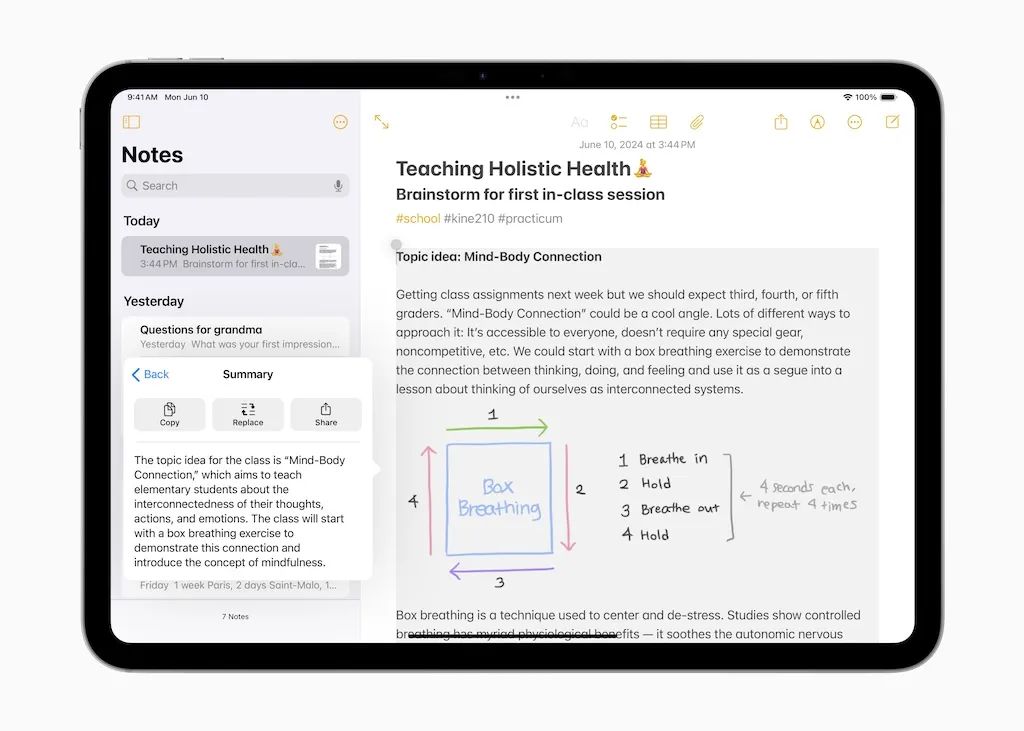

Writing Tools. For rewriting, proofreading and summarizing text. Apple will implement Writing Tools as system-level services, available across Mail, Notes, Pages and in third-party apps. I once asked Steve Ballmer why Microsoft had not implemented a spellchecker in Windows (given that many hard drives at the time held multiple dictionaries for app-specific spellchecking). His response: “No one needs a spellchecker in a browser.” Well, now they do. And in almost every other app. Writing Tools will provide thread summaries in e-mail, not just summaries of individual e-mails.

Priority Notifications. These will also be managed by Apple Intelligence, ensuring that the most important ones appear.

iPhone Audio Transcription and Summarization. The iPhone will become a professional conferencing system with the ability to record, transcribe and summarize audio. However, I don’t believe FaceTime calls were included. Those features can’t be far behind. Everyone on a call will be notified when the recording starts. This is not an AI feature but a smart transparency choice.

Image Playground. Write a note. Circle a blank space using the Image Wand, and Image Playground will extract information from the note page to inspire an illustration. That is an example of thoughtful teams at Apple taking the time to figure out how to embed AI without shouting its presence in the UI. Images will be created on the device and can be rendered as animation, sketches or illustrations. The feature will also transform sketches into more finished artwork. Image Playground may be the end of many clipart services.

Image Playground will even use an image from the owner’s personal library to create a rendering of a friend or family member. I will not be surprised if Apple ends up in some privacy discussions around this feature as people challenge what can be done with their likeness.

Genmojis will handle smaller images by creating custom emojis—so much for the emoji committee. Apple devices will be capable of crafting custom emojis that include likenesses of the sender or receiver with just about anything you can imagine tacked on to them. Dan wearing a Dodger cap? Sure. Janet wearing aviators? Why not? Genmojis will be available as emojis, tapbacks and stickers.

Photos. No one will be surprised that Apple is incorporating more AI into Photos. With Samsung and Google already in the AI photo game, Apple’s Photos, the third major home for photos, now facilitates the removal of people and objects that mar an image. Generative AI can be used to create a memory movie from online photos. As the generative organization of images becomes more sophisticated, I can imagine a hit to montage makers for weddings and bar mitzvahs.

Siri reimagined. So far, these features are not Siri-based, meaning that AI has become infused within apps and experiences. Siri is no longer the primary entry point for Apple Intelligence. That said, Apple did not forget Siri. Siri now acts as a proper assistant. And like the other Apple Intelligence features, its glowing orb disappears, becoming a flash across the display.

While Siri becomes more invisible, what it can see expands. Siri will now be aware of previously seen content and the current content on the display. Say, “Bring up that article I was reading on backpropagation,” and the article will appear; “Summarize the web page” should also work.

The most important feature isn’t the local LLM but the idea of Personal Intelligence, which rests on a semantic index. This index creates a representation of the owner through relationships between data types and an understanding of what those data types mean to each other. Today, Siri can’t handle the query, “When is Mom’s flight landing?” Current Siri returns a web search that includes mothers on TikTok talking about plane landings. That’s not very helpful for those picking up Mom from the airport.

With the semantic index, Apple devices will recognize data like who “Mom” is and find e-mails from her or saved files with today’s date and flight information. Follow-up queries like “How long will it take to pick up Mom?” will also likely work, recognizing that Mom is at a particular airport and where the device’s owner is at the moment.
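For readers who want to picture how such an index might connect "Mom" to a flight, here is a minimal Python sketch. It is not Apple's implementation, which has not been published in this detail; the class names, fields, contact, flight number and airport are all hypothetical, chosen only to illustrate the idea of linking a relationship to a person and that person to dated travel data.

```python
from dataclasses import dataclass
from datetime import date, datetime
from typing import Optional


@dataclass
class Contact:
    name: str
    relationship: str  # hypothetical field, e.g. "mom"


@dataclass
class Email:
    sender: str  # name of the contact who sent the message
    flight_number: Optional[str] = None
    arrival_airport: Optional[str] = None
    arrival_time: Optional[datetime] = None


class SemanticIndex:
    """Toy index that links people to the messages carrying their travel data."""

    def __init__(self, contacts, emails):
        # Map a relationship word ("mom") to the matching contact.
        self._by_relationship = {c.relationship.lower(): c for c in contacts}
        # Group messages by sender so a person resolves to their e-mails.
        self._emails_by_sender = {}
        for email in emails:
            self._emails_by_sender.setdefault(email.sender, []).append(email)

    def flight_landing(self, alias, on_day):
        """Return the first e-mail from `alias` with a flight arriving on `on_day`."""
        person = self._by_relationship.get(alias.lower())
        if person is None:
            return None
        for email in self._emails_by_sender.get(person.name, []):
            if email.arrival_time and email.arrival_time.date() == on_day:
                return email
        return None


# Hypothetical data: one contact and one itinerary e-mail.
index = SemanticIndex(
    contacts=[Contact("Pat Example", "mom")],
    emails=[Email("Pat Example", "XX123", "SEA", datetime(2024, 6, 10, 15, 45))],
)
hit = index.flight_landing("Mom", date(2024, 6, 10))
print(hit.flight_number, hit.arrival_airport, hit.arrival_time)
```

The point of the sketch is the two hops: "Mom" resolves to a person, and the person resolves to dated content. A follow-up such as the drive time to the airport would add a third hop from the arrival airport to the owner's current location.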

Interestingly, Microsoft’s AI Recall + Copilot seeks to do many of the same things that Apple discussed. Apple, however, shared the semantic index in support of end-user experiences with repeated shoutouts to privacy. Microsoft already saw a backlash because it doesn’t learn from its own history. Recall suffered from the same botched marketing as the initial Viva launch: a lack of user- and privacy-first design and messaging. Apple, on the other hand, touted privacy, and it did so in the context of selling an idea that won’t hit devices for several months, giving the company time to set and adjust expectations.

Apple also gets that basic generative AI, meaning words and images, is already table stakes. In many ways, generative AI already verges on boring for some, and for others, it creates new friction as people seek free ways to leverage the tools through clipboards and files rather than expensive subscriptions. Generative AI, while it still has a wild side, is good enough to support most commercial writing scenarios and, with some caution (like adjusting for abhorrent spelling), is pretty good for illustration.

The current state of AI isn’t good enough, and Apple knows that. Real AI needs to understand its users and act on their behalf, understand intent, and find a way to work with data to fulfill that intent. None of the AI systems can do that yet. Apple promised personal intelligence-informed collaboration today at WWDC.

OpenAI can’t move the needle without Apple or Microsoft because it doesn’t own the data or the devices. Apple’s local-first approach offers the best of both worlds: proprietary, device- and data-aware applications and the choice to go beyond.

ChatGPT integration. Initially, that choice to go beyond on-device processing involves a conscious decision to engage with OpenAI’s ChatGPT. Regardless of how far LLM compression advances, Apple can’t fit all the world’s knowledge onto a device. Apple chose ChatGPT integration, but the device asks the user before handing off a request. Apple claims not to send anything beyond the query to OpenAI’s servers. The privacy-first model extends to partitioning queries sent to third-party services.

The outreach to ChatGPT does not require a subscription, although those with a subscription can incorporate advanced features into their ask.

None of these features are available today on any Apple device, except to developers. Public betas will begin soon, giving regular users the ability to test them. They won’t be fully baked until this fall, and some will likely arrive well after that. I highly encourage readers to explore the public betas on a secondary device.

Learn what you can and give feedback. That’s what betas are for. Don’t jump onto forums to complain or rant. Send thoughtful feedback on the experience to Apple through the mechanisms provided in the betas.

To find out more about Apple Intelligence and the implications for the market, read the rest of the article: Apple Intelligence Initial Analysis: Apple Shows the Market How AI Should Be Done on Devices

About the author:

Daniel W. Rasmus, the author of Listening to the Future, is a strategist and industry analyst who has helped clients put their future in context. Rasmus uses scenarios to analyze trends in society, technology, economics, the environment, and politics in order to discover implications used to develop and refine products, services, and experiences. He leverages this work and methodology for content development, workshops, and for professional development.
